The Action Selection Trail

For this experiment, we represent a simple Action Selection agent: an autonomous agent that makes decisions based solely on its sensors. To define the experiment, we were inspired by (Braitenberg, 1986), specifically the proposed vehicle 3c:

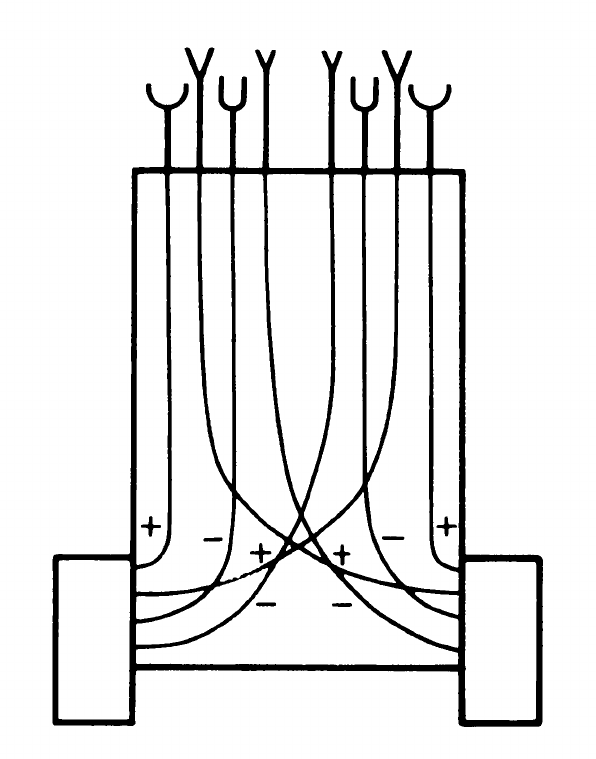

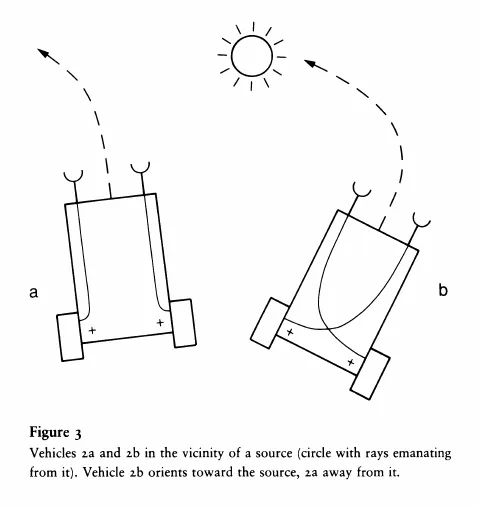

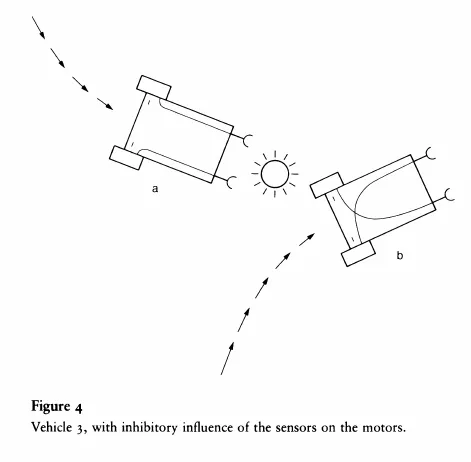

The vehicle can have several dependency rules, as long as each takes one of two forms: "the more, the more" or "the more, the less." In the first, the higher the value captured by the sensor, the higher the wheel speed; in the second, the higher the value, the lower the wheel speed.

The connection can be direct (parallel), where the right sensor drives the right wheel on the same side, or crossed, where the right sensor drives the left wheel on the opposite side.

The expected behaviors are:

- "The more, the more": Vehicle 2a - Coward (run away) and Vehicle 2b - Aggressive (destroy)

- "The more, the less"/inhibitory: Vehicle 3a - Love (approach and admire while standing still) and Vehicle 3b - Explorer (admire but seek other sources)

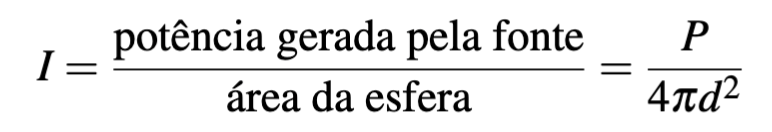
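The four combinations of wiring (direct or crossed) and rule ("the more, the more" or "the more, the less") can be sketched as a small function. This is a minimal illustration, not the experiment's implementation: the function name `wheel_speeds`, the `VEHICLES` table, the assumption that sensor readings are normalized to [0, 1], and the linear mapping to wheel speed are all hypothetical choices made for the example.

```python
def wheel_speeds(left_intensity, right_intensity, crossed, inhibitory, max_speed=1.0):
    """Map two sensor readings in [0, 1] to (left_wheel, right_wheel) speeds.

    Assumption: a linear mapping; the source only states the direction of
    the dependency ("the more, the more" or "the more, the less").
    """
    # Direct wiring feeds each wheel from the same-side sensor;
    # crossed wiring feeds it from the opposite-side sensor.
    if crossed:
        left_input, right_input = right_intensity, left_intensity
    else:
        left_input, right_input = left_intensity, right_intensity

    if inhibitory:
        # "The more, the less": a stronger reading slows the wheel.
        return max_speed * (1.0 - left_input), max_speed * (1.0 - right_input)
    # "The more, the more": a stronger reading speeds the wheel up.
    return max_speed * left_input, max_speed * right_input


# Wiring for each vehicle discussed in the text.
VEHICLES = {
    "2a": dict(crossed=False, inhibitory=False),  # Coward
    "2b": dict(crossed=True, inhibitory=False),   # Aggressive
    "3a": dict(crossed=False, inhibitory=True),   # Love
    "3b": dict(crossed=True, inhibitory=True),    # Explorer
}
```

With the light on the left, for example `wheel_speeds(0.75, 0.25, **VEHICLES["2a"])`, vehicle 2a spins its left wheel faster and so turns away from the light. Vehicles 2b and 3a both steer toward it, but under this mapping 2b speeds up as the readings grow while 3a slows to a stop.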

To create a "simulated" light sensor, we used the position of the light point and of two points on the front of the agent, one on the right and one on the left, which stand in for the light sensors. We obtained the light intensity at each point using the formula below, which relates light intensity to distance. Although this does not look exactly like the examples from (Braitenberg, 1986), as was our initial goal, it lets us use the proximity of objects other than the light as a sensor measurement, which opens more possibilities for implementing behaviors: we could replace the light with a blue cube, for example, and use the same formula to create a blue cube sensor.

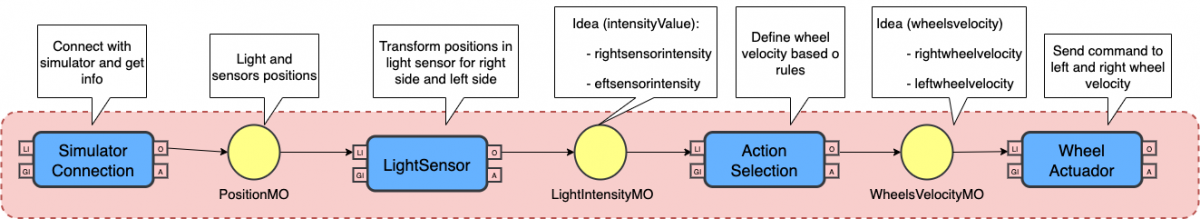
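As a rough sketch of a distance-based sensor of this kind, the code below computes two sensor points at the front of the agent and an intensity for each. The exact formula used in the experiment is the one in the figure. The falloff `source_power / (1 + d^2)` here is only an assumed stand-in chosen because it is bounded and decreases with distance, and the names `sensor_positions` and `light_intensity` and the offset values are hypothetical.

```python
import math


def sensor_positions(x, y, heading, forward_offset=0.2, lateral_offset=0.15):
    """Return (left_point, right_point) at the front of an agent.

    heading is in radians, measured counter-clockwise from the +x axis.
    The offsets are illustrative values, not taken from the robot model.
    """
    forward = (math.cos(heading), math.sin(heading))
    leftward = (-math.sin(heading), math.cos(heading))  # forward rotated by +90 degrees
    front = (x + forward_offset * forward[0], y + forward_offset * forward[1])
    left = (front[0] + lateral_offset * leftward[0], front[1] + lateral_offset * leftward[1])
    right = (front[0] - lateral_offset * leftward[0], front[1] - lateral_offset * leftward[1])
    return left, right


def light_intensity(sensor_pos, target_pos, source_power=1.0):
    """Assumed falloff: source_power / (1 + squared distance)."""
    d2 = sum((s - t) ** 2 for s, t in zip(sensor_pos, target_pos))
    return source_power / (1.0 + d2)
```

Because the function only needs two positions, `target_pos` can be the position of any object, which is what makes the same measurement reusable as, say, a blue cube sensor.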

Thus, we have the CST architecture modeled in the figure below. The experiment implementation is available on GitHub in the lab at , thus demonstrating the use of Action Selection in an autonomous agent.

We have a codelet responsible for the connection with the CoppeliaSim simulation, followed by a Memory Object holding the positions of the light and of the sensors. A light sensor then converts these positions into intensities, producing the light intensity values for the right and left sides. Next, the Action Selection applies the rules described above to set the wheel velocities based on the light intensities. These values are stored in the WheelsVelocity Memory Object, which is accessed by the Wheel Actuator codelet; this codelet sends the command to the simulator to change the wheel velocities.

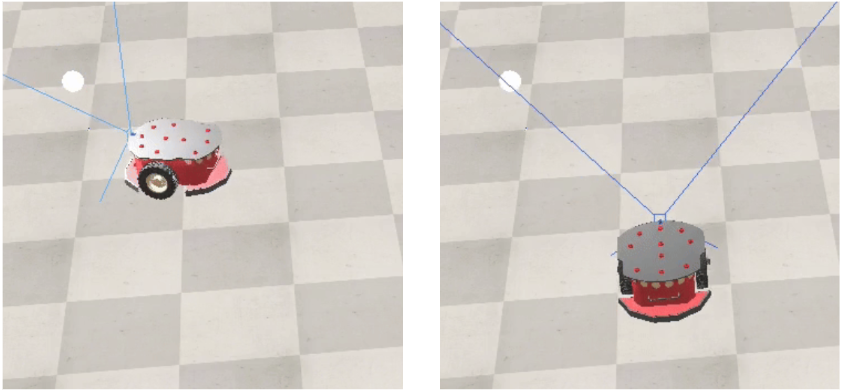
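The data flow in this architecture can be sketched in plain Python. The classes below are hypothetical stand-ins written for this example and are not the CST API. The codelets run once, in sequence, rather than on a scheduler, a list stands in for the simulator, and the intensity falloff `1 / (1 + d^2)` is an assumption. The rule shown is the direct, inhibitory wiring of vehicle 3a.

```python
from dataclasses import dataclass
from typing import Any


@dataclass
class MemoryObject:
    """Hypothetical stand-in for a named value shared between codelets."""
    name: str
    value: Any = None


def intensity(point, light):
    """Assumed falloff 1 / (1 + d^2), not the formula from the figure."""
    d2 = sum((p - q) ** 2 for p, q in zip(point, light))
    return 1.0 / (1.0 + d2)


class LightSensor:
    """Reads positions, writes (left, right) light intensities."""
    def __init__(self, positions, intensities):
        self.positions, self.intensities = positions, intensities

    def proc(self):
        p = self.positions.value
        self.intensities.value = (
            intensity(p["left_sensor"], p["light"]),
            intensity(p["right_sensor"], p["light"]),
        )


class ActionSelection:
    """Reads intensities, writes wheel velocities (vehicle 3a rule)."""
    def __init__(self, intensities, wheels_velocity, max_speed=1.0):
        self.intensities, self.wheels_velocity = intensities, wheels_velocity
        self.max_speed = max_speed

    def proc(self):
        left, right = self.intensities.value
        self.wheels_velocity.value = (
            self.max_speed * (1.0 - left),
            self.max_speed * (1.0 - right),
        )


class WheelActuator:
    """Reads wheel velocities and 'sends' them; a list stands in for the simulator."""
    def __init__(self, wheels_velocity, sent_commands):
        self.wheels_velocity, self.sent_commands = wheels_velocity, sent_commands

    def proc(self):
        self.sent_commands.append(self.wheels_velocity.value)


def run_cycle(positions_value):
    """Run one sensor -> action selection -> actuator pass and return the commands sent."""
    positions = MemoryObject("Positions", positions_value)
    intensities = MemoryObject("LightIntensities")
    wheels_velocity = MemoryObject("WheelsVelocity")
    sent_commands = []
    codelets = [
        LightSensor(positions, intensities),
        ActionSelection(intensities, wheels_velocity),
        WheelActuator(wheels_velocity, sent_commands),
    ]
    for codelet in codelets:
        codelet.proc()
    return sent_commands
```

Keeping each stage behind its own memory object means the rule in `ActionSelection` can be swapped for another vehicle's wiring without touching the sensor or actuator stages.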

For the implementation, we used a scene in CoppeliaSim (the scene can be found in the project repository) containing a central light point and a Pioneer 3-DX robot, a model available in CoppeliaSim itself that has two wheels, one right and one left. This robot is our agent, controlled by the cognitive architecture. We implemented the architecture above for each of the dependency rules between sensor information and wheel speed of vehicles 2 and 3, and we were able to observe the expected behaviors. In the figure below, we can see two frames from the simulator of an agent demonstrating the "Love" behavior of vehicle 3a: it approaches the light point and stops, admiring it.