The Behavior Model Trail

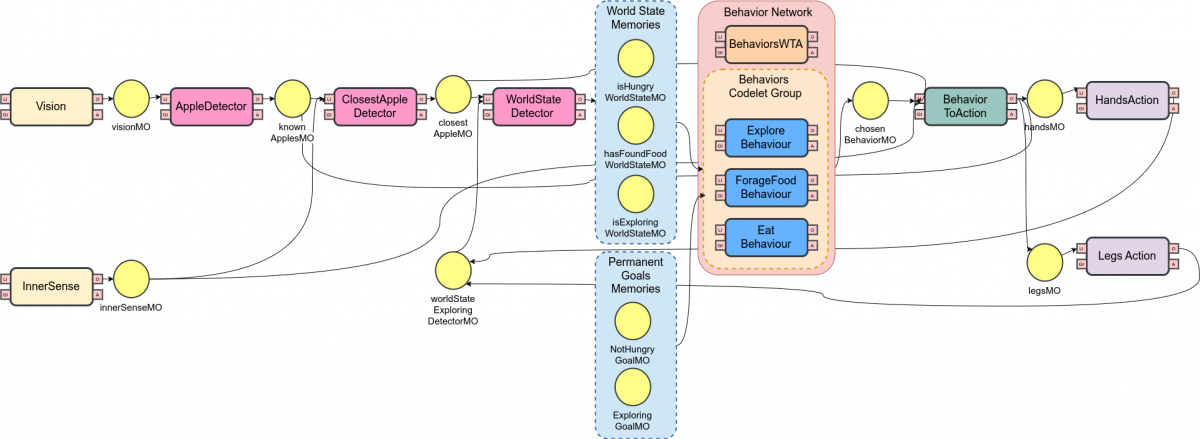

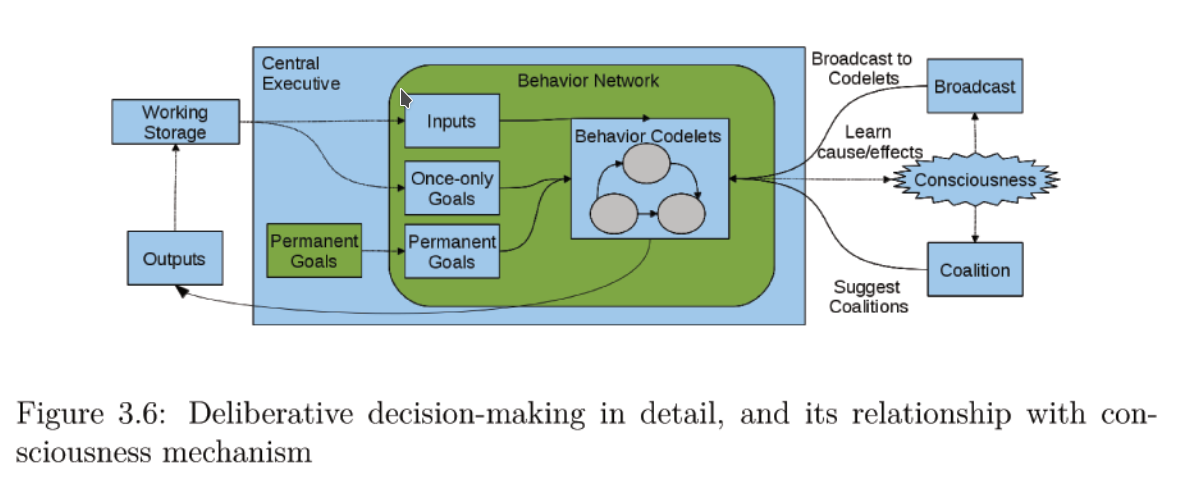

One of the most important goals any intelligent agent has is to decide which action to take next (Franklin, 1995). An action selection mechanism called the “behavior network”, capable of both deliberative and reactive action selection, has been studied by Faghihi and Franklin (2012) and Dorer (2010), both building on the original work by Maes (1989). In this trail, we will study the CST implementation of a Behavior Network as modified in Klaus's master's thesis (2015). This behavior network is able to decide which behavior is most relevant in a given situation. This is the deliberative decision-making architecture:

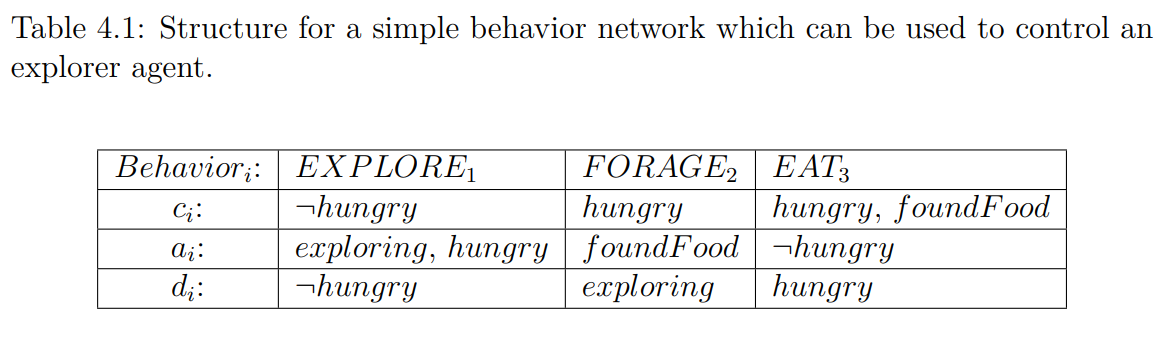

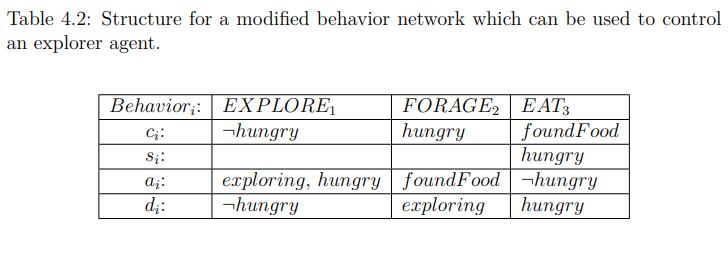

To define a Behavior Network, we must define, for each possible behavior, its preconditions list, add list and delete list. Here is a simple example:

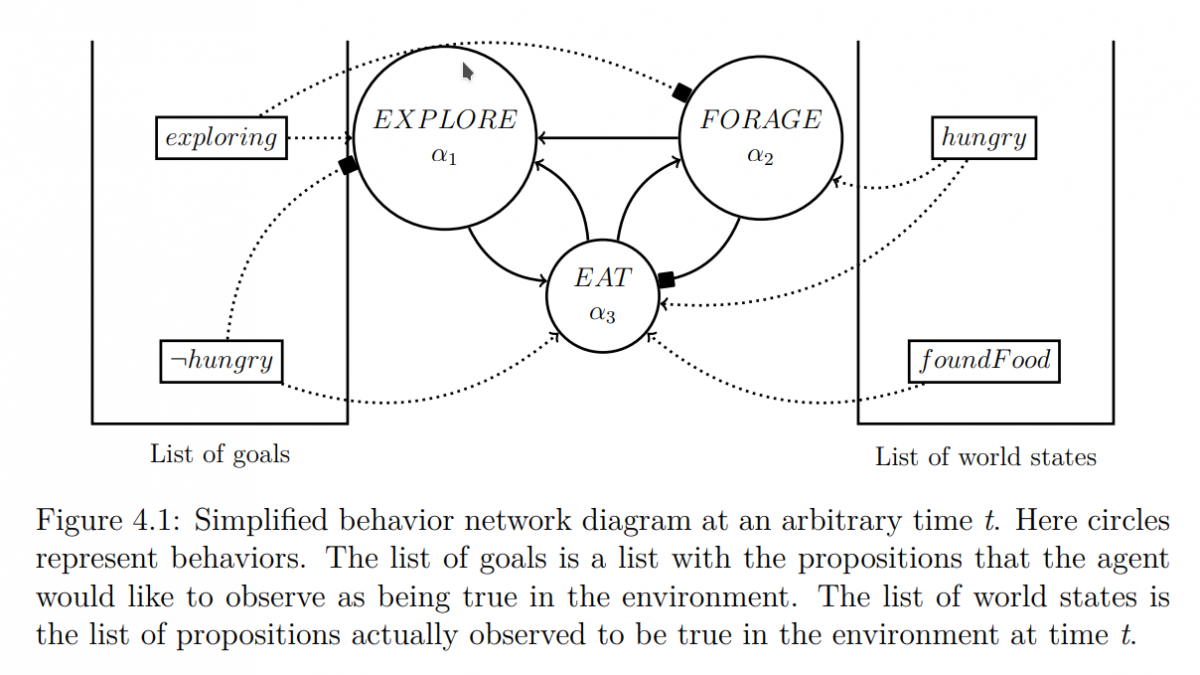

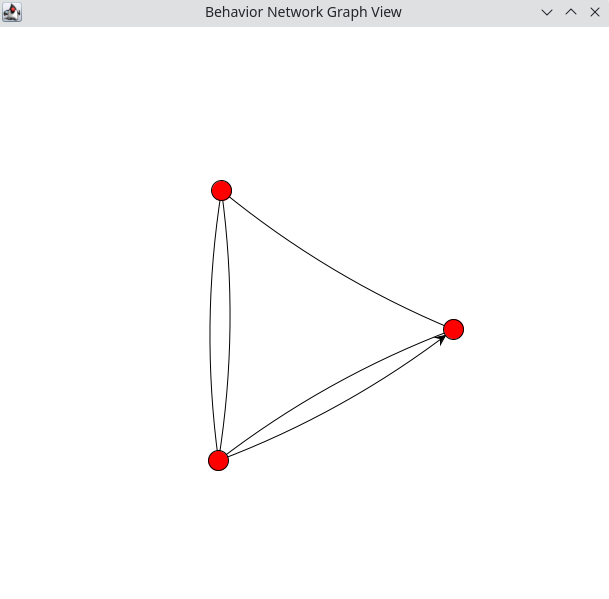

It is also important to keep in mind that, in the original MASM (Maes Action Selection Mechanism), a behavior can become executable if, and only if, all of its preconditions are observed to be true at time t. Also, only one behavior can be active at any given time t. Given these definitions, behavior modules are linked in a network through three types of links: successor links, predecessor links, and conflicter links. The behavior network can therefore be represented as the list of goals, the list of world states, and all the behaviors connected by successor, predecessor and conflicter links, as we can observe in the figure:

To allow a behavior to be selected even when some of its conditions are not satisfied, Klaus's modification adds a list of "soft-preconditions", denoted by the new term si. These soft-preconditions affect the flow of energy in the network in the same way the original preconditions do, but they do not block the behavior from being executed when those conditions are not observed. Adding soft preconditions to the example, we get:

Important definitions:

- Preconditions List: is a list of preconditions which must be fulfilled (observed to be true in the world) before the behavior can become active.

- Soft Preconditions List: works like the original preconditions list from MASM (Maes Action Selection Mechanism), but does not block a given behavior from becoming executable.

- Add List: is a list of propositions that are expected to become true after the given behavior gets executed.

- Delete List: holds a list of propositions that are expected to become false when the behavior gets executed.

- World State List: is the list of propositions actually observed by the agent to be true in the environment at time t.

- Goals List: propositions that the agent would like to observe as being true in the world.

- Successor links: there is a successor link from a behavior x to a behavior y if a proposition in the add list of x also exists in the precondition list of y. That is, the consequences of x increase the probability of y being chosen.

- Conflicter links: there is a conflicter link from a behavior x to a behavior y if a proposition in x's delete list is also in y's precondition list. That is, if x is chosen, its consequences decrease the probability of y being chosen.
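The successor and conflicter definitions above can be checked mechanically from each behavior's lists. The following is a minimal, self-contained sketch in plain Java, not the CST implementation: `LinkSketch`, `BehaviorSpec` and the helper methods are hypothetical names introduced only for illustration. The Eat lists follow the example used later in this trail, while the lists for `forageFood` are assumed.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;

public class LinkSketch {

    /** Hypothetical stand-in for a behavior's lists; this is not the CST Behavior class. */
    static final class BehaviorSpec {
        final String name;
        final Set<String> preconditions;
        final Set<String> addList;
        final Set<String> deleteList;

        BehaviorSpec(String name, Set<String> preconditions,
                     Set<String> addList, Set<String> deleteList) {
            this.name = name;
            this.preconditions = preconditions;
            this.addList = addList;
            this.deleteList = deleteList;
        }
    }

    /** True if the two sets share at least one proposition. */
    static boolean sharesProposition(Set<String> a, Set<String> b) {
        return a.stream().anyMatch(b::contains);
    }

    /** Successor link x -> y: something x adds is a precondition of y. */
    static boolean hasSuccessorLink(BehaviorSpec x, BehaviorSpec y) {
        return sharesProposition(x.addList, y.preconditions);
    }

    /** Conflicter link x -> y: something x deletes is a precondition of y. */
    static boolean hasConflicterLink(BehaviorSpec x, BehaviorSpec y) {
        return sharesProposition(x.deleteList, y.preconditions);
    }

    /** Lists every successor and conflicter link between distinct behaviors. */
    static List<String> describeLinks(List<BehaviorSpec> behaviors) {
        List<String> links = new ArrayList<>();
        for (BehaviorSpec x : behaviors) {
            for (BehaviorSpec y : behaviors) {
                if (x == y) continue;
                if (hasSuccessorLink(x, y)) links.add(x.name + " -successor-> " + y.name);
                if (hasConflicterLink(x, y)) links.add(x.name + " -conflicter-> " + y.name);
            }
        }
        return links;
    }

    public static void main(String[] args) {
        // Eat lists follow the trail's example; the forageFood lists are assumed.
        BehaviorSpec eat = new BehaviorSpec("eat",
                Set.of("foundFood"), Set.of("NOT_hungry"), Set.of("hungry"));
        BehaviorSpec forageFood = new BehaviorSpec("forageFood",
                Set.of("hungry"), Set.of("foundFood"), Set.of());
        describeLinks(List.of(forageFood, eat)).forEach(System.out::println);
    }
}
```

With these assumed lists, `forageFood` gets a successor link to `eat` (it adds `foundFood`), and `eat` gets a conflicter link to `forageFood` (it deletes `hungry`).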

Implementation of Behavior Network

For each behavior, you must create a class that extends the class Behavior. Here is an example implementation of the Eat competence:

public class EatCompetence extends Behavior {

public EatCompetence(WorkingStorage ws, GlobalVariables globalVariables) {

super(ws, globalVariables);

}

@Override

public void operation() {

// Actions performed when this behavior is selected go here

}

}

If this behavior is selected by the Behavior Network’s BehaviorsWTA (Winner Takes All strategy), its operation() method will be executed.
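To make the Winner Takes All idea concrete, here is a minimal sketch in plain Java. It is not the code of CST's BehaviorsWTA: `WinnerTakesAllSketch`, `Candidate` and `selectWinner` are hypothetical names, and the activation values are made up. It assumes that a behavior competes only when all of its hard preconditions are in the world state, and that the executable behavior with the highest activation wins. Soft preconditions would influence only the activation value, and the activation threshold of Maes's mechanism is left out for brevity.

```java
import java.util.List;
import java.util.Optional;
import java.util.Set;

public class WinnerTakesAllSketch {

    /** Hypothetical candidate: a name, its hard preconditions and its current activation. */
    static final class Candidate {
        final String name;
        final Set<String> hardPreconditions;
        final double activation;

        Candidate(String name, Set<String> hardPreconditions, double activation) {
            this.name = name;
            this.hardPreconditions = hardPreconditions;
            this.activation = activation;
        }

        /** Executable only if every hard precondition is observed in the world state. */
        boolean isExecutable(Set<String> worldState) {
            return worldState.containsAll(hardPreconditions);
        }
    }

    /** Returns the executable candidate with the highest activation, if any. */
    static Optional<Candidate> selectWinner(List<Candidate> candidates, Set<String> worldState) {
        Candidate best = null;
        for (Candidate c : candidates) {
            if (!c.isExecutable(worldState)) continue;
            if (best == null || c.activation > best.activation) best = c;
        }
        return Optional.ofNullable(best);
    }

    public static void main(String[] args) {
        Set<String> worldState = Set.of("hungry", "NOT_foundFood");
        List<Candidate> candidates = List.of(
                new Candidate("eat", Set.of("foundFood"), 0.9),   // blocked: no food found yet
                new Candidate("forageFood", Set.of(), 0.6),
                new Candidate("explore", Set.of(), 0.3));
        selectWinner(candidates, worldState)
                .ifPresent(w -> System.out.println("Selected: " + w.name));
        // prints "Selected: forageFood"
    }
}
```

Although `eat` has the highest activation here, its hard precondition `foundFood` is not observed, so it cannot win.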

Implementation on Agent Mind

Define the Working Storage (it can be set to null if not used) and the GlobalVariables:

//Define working storage and global variables for competences

WorkingStorage ws = null;

GlobalVariables gv = new GlobalVariables();

Initialize the behaviors:

Behavior eatCompetence = new EatCompetence(ws, gv);

Behavior forageFoodCompetence = new ForageFoodCompetence(ws, gv);

Behavior exploreCompetence = new ExploreCompetence(ws, gv);

Define the Add List, Delete List, Preconditions List and Soft-Preconditions List:

Each of these lists is an ArrayList of Memory. The Memories in this example are Memory Objects defined by a name and an Info. We define the Info as a String so that it matches the example in the table, and we use the name "WORLD_STATE" so that the entries can be compared with the World State information.

String stateHungry = "hungry";

String stateNotHungry = "NOT_hungry";

String stateFoundFood = "foundFood";

String stateNotFoundFood = "NOT_foundFood";

String stateExploring = "exploring";

String stateNotExploring = "NOT_exploring";

Memory stateHungryMO = createMemoryObject("WORLD_STATE", stateHungry);

Memory stateNotHungryMO = createMemoryObject("WORLD_STATE", stateNotHungry);

Memory stateFoundFoodMO = createMemoryObject("WORLD_STATE", stateFoundFood);

Memory stateExploringMO = createMemoryObject("WORLD_STATE", stateExploring);

//Define HARD-PRE-CONDITIONS

ArrayList<Memory> preconditionsListEat = new ArrayList<>();

preconditionsListEat.add(stateFoundFoodMO);

eatCompetence.setListOfPreconditions(preconditionsListEat);

//Define SOFT-PRE-CONDITIONS

ArrayList<Memory> softPreconditionsListEat = new ArrayList<>();

softPreconditionsListEat.add(stateHungryMO);

eatCompetence.setSoftPreconList(softPreconditionsListEat);

//Define ADD-LIST

ArrayList<Memory> addListEat = new ArrayList<>();

addListEat.add(stateNotHungryMO);

eatCompetence.setAddList(addListEat);

//Define DELETE-LIST

ArrayList<Memory> deleteListEat = new ArrayList<>();

deleteListEat.add(stateHungryMO);

eatCompetence.setDeleteList(deleteListEat);

Defining world state and goals

The competence must also receive the current world state, the once-only goals and the permanent goals; they must be defined as Codelet inputs. As in the example in the figures, we define the goals as "Not hungry" and "Exploring". The world state information will be received through the simulator connection, so these Memory Objects start without any information.

Memory worldStateHungryMO;

Memory worldStateFoundFoodMO;

Memory worldStateExploringMO;

Memory goalNotHungryMO = createMemoryObject("PERMANENT_GOAL", stateNotHungry);

Memory goalExploringMO = createMemoryObject("PERMANENT_GOAL", stateExploring);

worldStateHungryMO = createMemoryObject("WORLD_STATE", "");

worldStateFoundFoodMO = createMemoryObject("WORLD_STATE", "");

worldStateExploringMO = createMemoryObject("WORLD_STATE", "");

//Define WORLD_STATE_PARAMETERS as inputs

eatCompetence.addInput(worldStateHungryMO);

eatCompetence.addInput(worldStateFoundFoodMO);

eatCompetence.addInput(worldStateExploringMO);

//Define GOALS

eatCompetence.addInput(goalNotHungryMO);

eatCompetence.addInput(goalExploringMO);
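The relationship between the world state, the goals and a behavior's add and delete lists can be illustrated with plain string sets. This is only a sketch: `ExpectedEffectsSketch`, `applyEffects` and `unsatisfiedGoals` are hypothetical helpers, not CST methods. In the actual agent the world state is observed through the simulator rather than computed, so the add and delete lists describe expected effects only.

```java
import java.util.Set;
import java.util.TreeSet;

public class ExpectedEffectsSketch {

    /** Expected world state after a behavior runs: drop its delete list, then add its add list. */
    static Set<String> applyEffects(Set<String> worldState,
                                    Set<String> addList, Set<String> deleteList) {
        Set<String> next = new TreeSet<>(worldState);
        next.removeAll(deleteList);
        next.addAll(addList);
        return next;
    }

    /** Goals that are not observed in the given world state. */
    static Set<String> unsatisfiedGoals(Set<String> goals, Set<String> worldState) {
        Set<String> missing = new TreeSet<>(goals);
        missing.removeAll(worldState);
        return missing;
    }

    public static void main(String[] args) {
        // Propositions and goals use the same strings as the trail's example.
        Set<String> worldState = Set.of("hungry", "foundFood");
        Set<String> goals = Set.of("NOT_hungry", "exploring");

        // Expected effect of the Eat competence: add NOT_hungry, delete hungry.
        Set<String> afterEat = applyEffects(worldState, Set.of("NOT_hungry"), Set.of("hungry"));

        System.out.println("Expected state after eating: " + afterEat);
        System.out.println("Goals still unsatisfied: " + unsatisfiedGoals(goals, afterEat));
    }
}
```

In this sketch, eating is expected to satisfy the "NOT_hungry" goal, while "exploring" remains unsatisfied and would have to be achieved by another behavior.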

Define Behavior Network

After defining all the Behavior Codelets, define the BehaviorNetwork and add all the Behaviors to it. You can use the method setCoalition to define the consciousCompetences. To visualize the activation of the behaviors in the behavior network, you can use BHMonitor, passing as parameters the behavior network, the list of names of all the behaviors you want to visualize, and the global variables. You can also visualize the Behavior Network graph using BNplot, with the behaviors list as parameter.

BehaviorNetwork bn = new BehaviorNetwork(this.getCodeRack(), ws);

bn.addCodelet(forageFoodCompetence);

bn.addCodelet(eatCompetence);

bn.addCodelet(exploreCompetence);

ArrayList<String> behaviorsIWantShownInGraphics = new ArrayList<>();

behaviorsIWantShownInGraphics.add(eatCompetence.getName());

behaviorsIWantShownInGraphics.add(forageFoodCompetence.getName());

behaviorsIWantShownInGraphics.add(exploreCompetence.getName());

BHMonitor bHMonitor = new BHMonitor(bn, behaviorsIWantShownInGraphics, gv);

insertCodelet(bHMonitor);

ArrayList<Behavior> behaviorList = new ArrayList<>();

behaviorList.add(eatCompetence);

behaviorList.add(forageFoodCompetence);

behaviorList.add(exploreCompetence);

bn.setCoalition(behaviorList);

BNplot bNplot = new BNplot(behaviorList);

bNplot.plot();

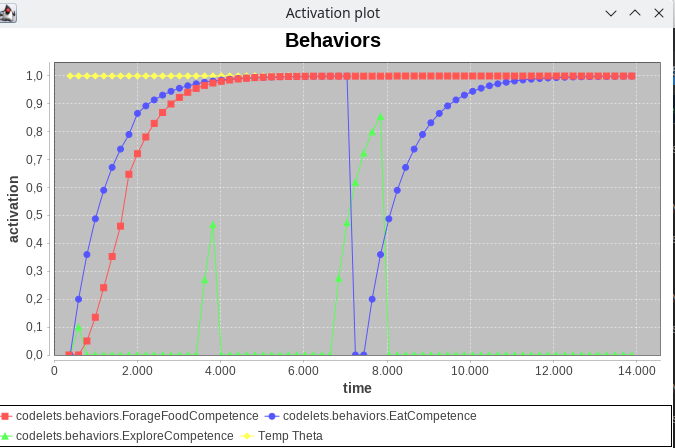

The figure shows an example of a Behavior Network Plot, showing the activation of each behavior over time:

The figure shows an example of a Behavior Network Plot, a graph representing the Behavior Network:

The Behavior Network adds a BehaviorsWTA as a Mind codelet, so it is not necessary to start the behavior codelets individually. To start the code, you must call the start method of the Mind class.

public class AgentMind extends Mind {

public AgentMind(Environment env) {

...

start();

}

}

We have implemented this example as a demo in the repository: https://github.com/CST-Group/DemoBehaviorNetwork

To adapt the example to the simulator and detect the world state as required by the Behavior Network, we created a